Author: priyanka

Top Quantum Computing Companies to look out for in 2020



Quantum computing was the unexpected highlight of India’s Union Budget 2020, when Finance Minister Nirmala Sitharaman allocated INR 8,000 crore over five years to the National Mission on Quantum Technologies and Applications.

Given India’s growing interest in quantum computing, we have listed the top quantum computing companies to look out for in 2020.

- Google Research

Google Research opened a Quantum Artificial Intelligence Lab in collaboration with NASA and the Universities Space Research Association (USRA). Its goal is to pioneer research on how quantum computing might help with machine learning and other hard computer science problems.

- IBM

IBM positions itself as a partner for organizations starting their quantum computing journey and preparing for the era of quantum advantage. Through IBM, organizations can explore use cases, build practical quantum skills, and access world-class expertise and technology to advance in the field.

IBM Quantum aims to help you be among the first to explore, benefit from, and shape the fast-approaching era of quantum computing. As a fundamentally different way of computing, quantum computing could transform businesses and societies.

- Intel

Intel, one of the best-known technology companies in the world, committed $50 million to QuTech in 2015. At CES 2018, Intel also unveiled a 49-qubit superconducting test chip code-named Tangle Lake, a significant milestone in its quantum chip development.

- Microsoft

Microsoft is another prominent leader in the quantum computing ecosystem. Its team has focused on designing solutions for fault-tolerant, scalable quantum computing, and Microsoft has been researching topological quantum computing, an approach intended to make quantum states more robust and easier to engineer. Microsoft has also taken significant steps to improve the software development layer of quantum computing, for example with its Quantum Development Kit and the Q# programming language.

- Alibaba Cloud (Aliyun)

Alibaba Cloud, in partnership with the Chinese Academy of Sciences, has invested in quantum computing research by establishing the Alibaba Quantum Laboratory. It is one of the leaders in China’s quantum computing race, and its research and development areas include quantum processors, quantum algorithms, and quantum systems.

- D-Wave Systems

D-Wave Systems specializes in developing and delivering quantum computing systems. Its quantum computers are designed to solve machine learning, optimization, and sampling problems whose data volumes and complexity overwhelm the ability of conventional computers to derive timely, accurate, and actionable knowledge.

- Lockheed Martin

Lockheed Martin, in collaboration with the University of Southern California, founded a quantum computation center. The center focuses on adiabatic quantum computing, in which a problem is encoded into the lowest energy state of a physical quantum system, so that finding that state yields the optimal answer to certain problems with many variables.
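D-Wave’s annealers and the adiabatic approach described above both target problems that can be written as "find the lowest-energy state of an energy function." As a purely classical illustration (not D-Wave’s or Lockheed Martin’s software), the sketch below writes a tiny, hypothetical choice problem as a QUBO (quadratic unconstrained binary optimization) and finds its ground state by brute force. Quantum hardware searches for that minimum physically; enumerating all 2^n assignments like this is only feasible for a handful of variables.

```python
from itertools import product

def qubo_energy(x, Q):
    """Energy E(x) = sum of Q[(i, j)] * x_i * x_j over the dict entries, for binary x."""
    return sum(coeff * x[i] * x[j] for (i, j), coeff in Q.items())

def ground_state(Q, n):
    """Exhaustively check all 2**n assignments and return the lowest-energy one."""
    best = min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))
    return best, qubo_energy(best, Q)

# Hypothetical toy problem: pick exactly one of three options with costs 1, 2, 3.
# The "exactly one" constraint becomes a penalty P * (x0 + x1 + x2 - 1)**2 with P = 10.
# Expanding it gives diagonal terms cost_i - P and pair terms 2 * P; the constant P
# is dropped because it does not change which state has the lowest energy.
P = 10
costs = [1, 2, 3]
Q = {(i, i): c - P for i, c in enumerate(costs)}
Q.update({(i, j): 2 * P for i in range(len(costs)) for j in range(i + 1, len(costs))})

state, energy = ground_state(Q, len(costs))
print(state, energy)  # (1, 0, 0) -9
```

The penalty weight only has to be large enough that breaking the constraint always costs more than any saving in the objective; choosing it well is part of formulating real problems for an annealer.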

- Honeywell

Honeywell is one of the significant pioneers in the quantum computing landscape. The company began its quantum computing journey in 2014 as an advanced research project investigating emerging technology.

Honeywell focuses on trapped-ion quantum computing. This approach uses individual ions (charged atoms) suspended in space by electromagnetic fields as qubits, and moves those ions to carry information around the device.

- Rigetti

Rigetti Computing is a full-stack quantum computing company that develops and manufactures superconducting quantum integrated circuits, packages and deploys those chips in a low-temperature environment, and builds control systems to perform quantum logic operations on them.

- IonQ

IonQ builds general-purpose trapped-ion quantum information processors. The company says its approach combines perfect qubit replication, unmatched physical performance, optical networkability, and highly optimized algorithms to create a quantum computer that is both powerful and scalable, and that can support a broad range of applications across industries.

- Amazon Braket

Amazon Braket is a fully managed service that helps you get started with quantum computing. It offers a development environment in which you can design quantum algorithms, test them on simulated quantum computers, and run them on your choice of quantum hardware technologies.
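To make "testing an algorithm on a simulated quantum computer" concrete, here is a minimal, dependency-free state-vector simulator. It does not use the Amazon Braket SDK, and the function names are hypothetical. It prepares a two-qubit Bell state with a Hadamard gate followed by a CNOT gate, then samples measurement outcomes.

```python
import math
import random

def apply_h(state, target):
    """Apply a Hadamard gate to qubit `target` (bit `target` of the basis index)."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> target) & 1:          # visit each (bit=0, bit=1) amplitude pair once
            j = i | (1 << target)
            new[i] = s * (state[i] + state[j])
            new[j] = s * (state[i] - state[j])
    return new

def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

def sample(state, n, shots, seed=0):
    """Simulate measuring all n qubits `shots` times; returns bitstring counts."""
    rng = random.Random(seed)
    probs = [abs(a) ** 2 for a in state]
    counts = {}
    for idx in rng.choices(range(len(state)), weights=probs, k=shots):
        bits = format(idx, f"0{n}b")       # leftmost character is the highest qubit
        counts[bits] = counts.get(bits, 0) + 1
    return counts

n = 2
state = [1.0] + [0.0] * (2 ** n - 1)   # start in |00>
state = apply_h(state, 0)               # put qubit 0 into superposition
state = apply_cnot(state, 0, 1)         # entangle qubit 1 with qubit 0
print([round(abs(a) ** 2, 3) for a in state])  # [0.5, 0.0, 0.0, 0.5]
print(sample(state, n, shots=1000))             # only '00' and '11' appear
```

A state vector doubles in size with every added qubit, which is why managed services offer both simulators for small circuits and real hardware for larger experiments.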

- Baidu

Baidu has launched its own Institute for Quantum Computing, dedicated to quantum computing software and quantum information technology.

The institute is headed by Professor Duan Runyao, who is also director of the Centre for Quantum Software and Information at the University of Technology Sydney.

At the launch, Professor Duan said he planned to make Baidu’s Institute for Quantum Computing a world-class institution within five years, and that over the same period it would integrate quantum computing into Baidu’s business.

- Toshiba

Toshiba offers a quantum key distribution (QKD) system that delivers digital keys for cryptographic applications on fiber-based computer networks.

In 2015, the company also announced that genome data from Toshiba’s Life Science Analysis Center would be encrypted using a quantum communication system.
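As a rough illustration of how QKD can produce a shared key, here is a toy classical simulation of basis sifting in the textbook BB84 protocol. It assumes an ideal channel with no eavesdropper and no noise, and skips error estimation and privacy amplification; real QKD products, Toshiba’s included, involve far more elaborate protocols and hardware, and nothing here describes Toshiba’s implementation.

```python
import random

def bb84_sift(n_photons, seed=None):
    """Toy BB84: return the sifted keys held by Alice and Bob (ideal channel)."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_photons)]
    alice_bases = [rng.choice("+x") for _ in range(n_photons)]  # rectilinear or diagonal
    bob_bases = [rng.choice("+x") for _ in range(n_photons)]

    bob_results = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        # Same basis: Bob reads Alice's bit exactly. Different basis: a random outcome.
        bob_results.append(bit if a_basis == b_basis else rng.randint(0, 1))

    # Alice and Bob publicly compare bases (never bits) and keep only matching positions.
    keep = [i for i in range(n_photons) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_results[i] for i in keep]

alice_key, bob_key = bb84_sift(32, seed=1)
print(alice_key == bob_key)  # True
print(len(alice_key))        # varies with the seed; about half of the 32 bits on average
```

In the real protocol an eavesdropper measuring in the wrong basis disturbs the photons, so Alice and Bob compare a sample of their sifted bits to detect interception before using the key.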

- 1QB Information Technologies

1QBit is a quantum computing software company that specializes in developing applications for the finance and banking industry. Working with industry partners, it produces custom solutions to real-world problems with high degrees of computational complexity.

- Accenture

Accenture Labs is active in the quantum computing ecosystem and collaborates with leading companies such as Vancouver-based 1QBit. The company’s platform enables the development of hardware-agnostic applications that are compatible with both quantum and classical processors.


RECENT POSTS


- Clavent’s Emerge 2020 Martech Summit Goes Virtual, powered by CleverTap (08 Sep 2020, PR)


- Emerge 2020 HR Tech Summit Concluded Successfully (12 Jul 2020, PR)


- Emerge 2020 HR Tech Summit (12 Jul 2020, PR)


- My Experience Attending "How to Build High Performing Teams?" - Workshop On How to be a Good Manager (12 May 2020, Authored Articles)


- Top Quantum Computing Companies to look out for in 2020 (29 Apr 2020, Listicles)


How Is Blockchain Revolutionizing the BFSI Sector?



Blockchain technology is driving tremendous change across the BFSI (banking, financial services, and insurance) industry. Decentralized financial services have brought major improvements to the centralized banking system.

Let’s look at some of the major use cases of blockchain technology in the BFSI sector:

- Know Your Customer (KYC)

Banks and financial institutions are concerned about the rising cost of complying with KYC requirements. The process is time-consuming, and every bank and financial institution has to perform it independently. Today, banks need to upload customer KYC data to a central repository to check the information of an existing or new customer.

With a blockchain system, one financial organization’s independent verification of a customer can be made accessible to other organizations, so they do not have to restart the KYC process.

- Auditing and Bookkeeping

Standardizing financial statements on a blockchain allows auditors to verify the most crucial data easily and quickly, which reduces costs and saves a lot of time. Blockchain technology also makes it much easier to prove the integrity of electronic files.
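The integrity claim above usually rests on cryptographic hashing: a file’s fingerprint is recorded once (on a blockchain, in this scenario), and any later change to the file yields a different fingerprint. Here is a minimal sketch using SHA-256 from Python’s standard library; the `ledger` dict, the document ID, and the statement text are hypothetical stand-ins for an on-chain record.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the file contents, as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()

ledger = {}  # hypothetical stand-in for an on-chain record: document id -> fingerprint

def register(doc_id: str, data: bytes) -> None:
    """Record the fingerprint of a document when it is first filed."""
    ledger[doc_id] = fingerprint(data)

def verify(doc_id: str, data: bytes) -> bool:
    """An auditor recomputes the fingerprint and compares it with the recorded one."""
    return ledger.get(doc_id) == fingerprint(data)

statement = b"FY2019 balance sheet: total assets 1,000,000"
register("fy2019-bs", statement)
print(verify("fy2019-bs", statement))                                 # True
print(verify("fy2019-bs", statement.replace(b"1,000", b"9,000")))     # False: altered copy
```

Only the fingerprint needs to be stored on the shared ledger; the statement itself can stay in the institution’s own systems.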

- Smart Contracts

A smart contract is a piece of code that runs automatically when the conditions written into it are met.

Using smart contracts in banking can speed up complex financial processes. They also help ensure that information is transferred accurately, because a transaction is approved only if all the conditions written into the code are met.
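Production smart contracts run on a blockchain platform and are typically written in platform-specific languages such as Solidity for Ethereum. The Python sketch below only models the rule described above: a transaction is approved only when every written condition is met. The contract class and condition names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class LoanDisbursementContract:
    """Hypothetical contract: funds are released only when every condition is met."""
    amount: int
    conditions: dict = field(default_factory=lambda: {
        "kyc_verified": False,
        "collateral_registered": False,
        "borrower_signed": False,
    })
    released: bool = False

    def satisfy(self, name: str) -> None:
        """Mark one written condition as fulfilled."""
        if name not in self.conditions:
            raise KeyError(f"unknown condition: {name}")
        self.conditions[name] = True

    def execute(self) -> bool:
        """Release the funds (once) if and only if all conditions hold."""
        if not self.released and all(self.conditions.values()):
            self.released = True
        return self.released

contract = LoanDisbursementContract(amount=50_000)
contract.satisfy("kyc_verified")
contract.satisfy("borrower_signed")
print(contract.execute())  # False: collateral is not registered yet
contract.satisfy("collateral_registered")
print(contract.execute())  # True: every condition is now met
```

On a real blockchain, the same check would be executed by every validating node, so no single party could release the funds early.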

- Digital Identity Verification

Identity verification is a significant part of every transaction you make with your debit card or at your local bank branch, and your identity is indirectly linked to your assets.

- Fraud Reduction

Wherever money is involved, the chances of fraud increase. Around 40% of financial bodies are susceptible to heavy losses from economic crime. One reason may be the use of a centralized database system for both money and operations management. Blockchain is a secure, tamper-resistant technology that works on a distributed database system, so there is no single point of failure. Transactions are stored in blocks linked by cryptographic mechanisms that are very difficult to corrupt. Using blockchain technology can therefore help reduce cybercrime and attacks on the financial sector.
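The idea of blocks linked by a cryptographic mechanism can be sketched in a few lines: each block stores the SHA-256 hash of the previous block, so altering an earlier block breaks the link that follows it. This is a toy, single-machine illustration under simplifying assumptions (no signatures, no consensus, no distribution across nodes), not how any particular banking blockchain is built.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """Hash a canonical JSON encoding so key order does not change the result."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def append_block(chain: list, transactions: list) -> None:
    """Add a block that points at the hash of the current last block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "transactions": transactions})

def is_valid(chain: list) -> bool:
    """Every block must reference the hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )

chain = []
append_block(chain, [{"from": "A", "to": "B", "amount": 100}])
append_block(chain, [{"from": "B", "to": "C", "amount": 40}])
print(is_valid(chain))  # True
chain[0]["transactions"][0]["amount"] = 1000  # tamper with history
print(is_valid(chain))  # False
```

This check only catches edits to blocks that already have a successor; real networks also rely on many independent nodes agreeing on the latest block.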

- Syndicated lending

This is one area of banking where multiple institutions have come together in consortiums to adopt blockchain. Credit Suisse is one of 20 large institutions currently working to put syndicated lending on distributed ledger technology, more commonly known as blockchain. Syndicated lending still lags behind technologically: fax communications, long delays in getting loans, and other hurdles are common when making and processing syndicated loans.

- Global payment options

Blockchain technology is internet-based and does not require any specific operating setup: users can access data and conduct transactions across the globe using their accounts’ public and private keys.

- Blockchain security

The first priority for any financial institution is security and privacy. Given the strong security properties blockchain offers, many banks are likely to use it to store assets of extreme value.

- Blockchain with AI

Banks process a large number of tasks every day. As blockchain is increasingly combined with artificial intelligence, many financial institutions will likely adopt the combination to reduce their workload on tasks such as data storage and data monetization.

To Sum Up

This technology offers the banking industry many unique opportunities, but certain challenges must be overcome before it can have a noticeable impact on the sector.

In addition, the relevant authorities need to address regulation and oversight. The financial sector is synonymous with huge amounts of data, so data scalability must be sorted out before blockchain is deployed in it.

In summary, blockchain can revolutionize the banking and financial sector; what it needs is the right application and proven feasibility.


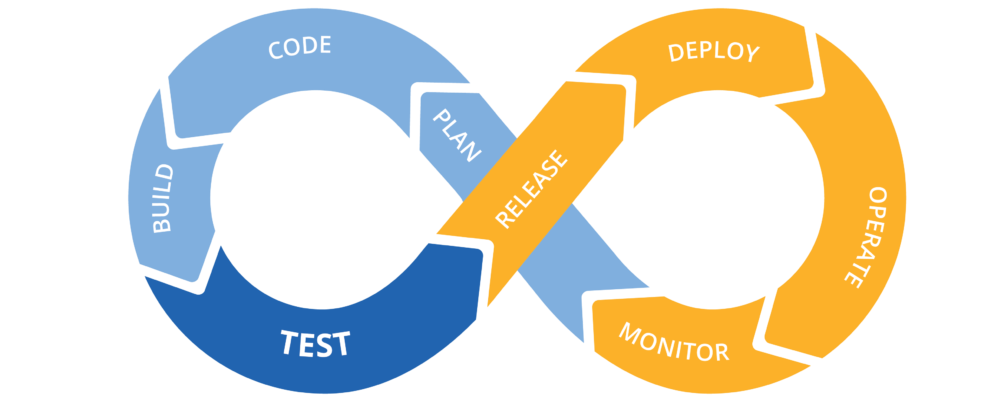

5 common misconceptions about DevOps


DevOps is a transformative operational concept designed to help development and production teams coordinate operations more effectively and efficiently. In theory, DevOps focuses on cultural changes that stimulate collaboration and efficiency, but the focus often ends up on daily tasks, distracting organizations from the core principles, and the value, that DevOps is built around.

This has led many technology professionals to develop misconceptions about DevOps, because they have been part of deployments (or know people involved in DevOps plans) that strayed from the movement’s core principles.

This struggle is understandable. Cultural changes are abstract measures that, when delivered effectively, lead to process innovation and significant value creation; that is the aim of DevOps. However, not everybody will buy into cultural adjustments or be comfortable changing how they work. This leads some organizations to respond with strict procedural guidelines that can detract from the results of movements like DevOps.

Here are some common misconceptions that emerge from this kind of environment:

- DevOps Doesn’t Work With ITIL Models

The IT Infrastructure Library (ITIL) creates seemingly rigid best practices to help organizations build stable, controllable IT operations. DevOps is built around creating a continuous delivery environment by breaking down long-standing operational silos. The two may seem contradictory, but ITIL gets a lot of bad press that it does not deserve: it includes lifecycle management principles that align naturally with what organizations are trying to achieve through DevOps.

- ITIL is too Rigid for DevOps

The core process models of DevOps and ITIL can work together, but some people think that even if you make the marriage work, it will fall apart because ITIL is so rigid while DevOps gives users the flexibility to work however suits them best. There may be some conflict here, but ITIL has been changing in recent years; ITIL 4, released in 2019, explicitly draws on Agile and DevOps practices.

- DevOps Emphasizes Continuous Change

There is no way around it: integrating DevOps principles into your operations means dealing with more change and release tasks, since the focus is on accelerating deployment through development and operations integration. This perception stems from DevOps’ initial popularity among web app developers. As DevOps for Dummies explains, most businesses will not face such frequent change and do not need to worry about continuous change deployment just because they support DevOps.

- DevOps Forces Developers to Bail Out Operations Teams

This misconception often results from DevOps deployments that don’t go well. If your DevOps efforts boil down to production teams complaining that an app is causing compatibility issues and needs to be changed, you are not really doing DevOps correctly. The goal is to get both teams working constructively to build applications with production configuration in mind, and to bring development-related knowledge into the final push to release.

- DevOps Eliminates Traditional IT Roles

If, in your DevOps environment, developers suddenly need to be good system admins, change managers, and database analysts, something went wrong. Treating DevOps as a movement to eliminate traditional IT roles puts too much strain on workers. The objective is to break down collaboration barriers, not to ask developers to do everything. Specialized skills play a major role in effective support operations, and traditional roles remain valuable in DevOps.


How AI is being used globally to fight coronavirus?


As of 10 April 2020, more than 6,500 confirmed cases had been reported in India. While governments across the globe work with local authorities and healthcare providers to track, respond to, and prevent the spread of the disease caused by the coronavirus, health experts are turning to advanced analytics and AI to augment current efforts to prevent further infection.

Data and analytics have proved useful in combating the spread of the disease, and the central government has access to ample data on the Indian population’s health and travel, as well as on the migration of both domestic and wild animals, all of which can help predict and track the disease’s trajectory.

Machine learning’s ability to analyze large amounts of data and offer insights can lead to a deeper understanding of diseases and help Indian health and government officials make better decisions throughout the evolution of an outbreak.

Government health agencies can use AI in four ways to limit the spread of COVID-19 and other diseases:

- Prediction

As the global population grows and continues to interact with animals, there are more opportunities for viruses that originate in animals to jump to humans and spread. The world has seen this in recent years, from SARS and MERS to new forms of the flu, and in the 2014 Ebola crisis in West Africa, where researchers found that the outbreak likely began with a toddler who interacted with bats in a tree stump.

Government and public health officials can use such data to be proactive and take steps to prevent these kinds of outbreaks, or at a minimum, to prepare for them better.

- Detection

When an unknown virus makes the jump to humans, time becomes a precious resource. The sooner an outbreak is detected, the sooner action can be taken to stop its spread and treat the infected population effectively. AI can help here as well.
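A simple, non-AI baseline for early outbreak detection is to flag days whose case counts jump far above the recent trend. The sketch below uses a trailing-window z-score. The window size, the threshold of 3 standard deviations, and the case counts are all made-up assumptions; real surveillance systems, AI-based or not, are considerably more sophisticated.

```python
from statistics import mean, stdev

def flag_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count exceeds the trailing-window mean
    by more than `threshold` sample standard deviations."""
    flagged = []
    for day in range(window, len(daily_counts)):
        history = daily_counts[day - window:day]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_counts[day] - mu) / sigma > threshold:
            flagged.append(day)
    return flagged

# Made-up daily case counts for one district: a stable baseline, then a sudden jump.
counts = [12, 9, 11, 10, 13, 12, 10, 11, 12, 30, 45]
print(flag_anomalies(counts))  # [9, 10]
```

An AI-based detector would replace the fixed mean-and-threshold rule with a model of expected counts (for example, one that accounts for weekly reporting patterns), but the alerting logic stays the same: compare what arrives with what was expected.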

- Response

After a disease event is identified, making informed decisions in a timely manner is critical to limiting the impact. AI can integrate travel, population, and disease data to predict where and how quickly a disease might spread.

In addition to predicting disease spread, AI can improve how current treatments are applied and accelerate the development of new ones. Radiologists are also using deep learning (machine learning systems that learn from large data sets) to make better treatment decisions based on medical imaging.
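Forecasting where and how quickly a disease might spread is often grounded in compartmental epidemic models. The sketch below is a classic discrete-time SIR (susceptible, infected, recovered) model rather than machine learning; it is swapped in because it shows the forecasting idea in a few lines. The population, transmission rate `beta`, and recovery rate `gamma` are hypothetical and not fitted to COVID-19 data.

```python
def simulate_sir(population, initial_infected, beta, gamma, days):
    """Discrete-time SIR model with one step per day; returns a list of (S, I, R)."""
    s, i, r = float(population - initial_infected), float(initial_infected), 0.0
    history = [(s, i, r)]
    for _ in range(days):
        new_infections = beta * s * i / population  # contacts between S and I
        new_recoveries = gamma * i                  # share of I recovering each day
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((s, i, r))
    return history

# Hypothetical parameters: 1 million people, 10 initial cases,
# beta = 0.3 transmissions per infected person per day, gamma = 0.1 (about 10 days ill).
history = simulate_sir(1_000_000, 10, beta=0.3, gamma=0.1, days=180)
peak_day = max(range(len(history)), key=lambda d: history[d][1])
print(f"peak on day {peak_day}: {history[peak_day][1]:,.0f} people infected")
```

In practice, the data sources mentioned above (travel, population, disease reports) are used to estimate parameters like `beta` and `gamma` per region, and machine learning can help with that estimation.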

- Recovery

Once an outbreak is contained or has ended, governments and global health organizations must make decisions about how to prevent or limit outbreaks in the future. Machine Learning can be used here too by simulating different outcomes to test and validate policies, public health initiatives and response plans.

Moreover, AI permits policy makers and health leaders to conduct a host of “what-if” analyses that will enable them to make data-driven decisions that have an increased likelihood of being effective.
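A what-if analysis boils down to running the same model under different assumptions and comparing the outcomes. The sketch below uses a deliberately simple branching model in which each case infects `r0 * (1 - reduction)` others per generation. The R0 of 2.5, the policy reductions, and the six-generation horizon are hypothetical, and the model ignores immunity and population limits.

```python
def projected_cases(initial_cases, r0, reduction, generations):
    """Cumulative cases when each case infects r0 * (1 - reduction) others per generation."""
    r_eff = r0 * (1 - reduction)
    current = total = float(initial_cases)
    for _ in range(generations):
        current *= r_eff
        total += current
    return total

# Hypothetical what-if scenarios: the fraction by which each policy cuts transmission.
scenarios = {"no action": 0.0, "distancing": 0.4, "lockdown": 0.7}
for name, reduction in scenarios.items():
    total = projected_cases(100, r0=2.5, reduction=reduction, generations=6)
    print(f"{name:>10}: about {total:,.0f} cumulative cases")
```

Pushing the effective reproduction number below 1 (as in the lockdown scenario) turns growth into decline, which is the kind of threshold effect these comparisons are meant to expose.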


Top DevOps Trends that Will Matter the most in 2020


With every passing year, our understanding of DevOps deepens, leading to new techniques, tools, and processes for bringing developers and IT operations closer together, while security becomes more tightly integrated with development lifecycles and operations workflows. As we head into 2020, sentiments keep changing, so it is a great time to reflect on how people view DevOps, on popular trends, and on common questions coming up across the industry.

Here are the DevOps trends that will matter most in 2020:

- Agile and DevOps will increase strategic collaboration between technology and business functions

Agile and DevOps are grassroots movements that started within technology teams. In many cases, however, they have not broken out of the technology function, even though Agile has been adopted in other functions, including human resources, procurement, finance, and marketing.

However, this does not seem to have helped the technology community join forces with colleagues in those functions. Because of the competitive pressure digital puts on organizations, we will start to see more collaboration across functions, with Agile as the conversation starter.

To speed this up, encourage your teams to talk to people from other functions about their experience with agile methods. Questions that help break the ice include: How are you doing agile? What are you doing? What is changing for you? What issues are you facing? How could we work together to address them? These questions help people from different functions get to know each other as people, and collaboration will improve.

- Learning, training and improving DevOps skills will become an organizational priority

DevOps requires trying out new technologies. Recent research found that around 50% of survey respondents prefer to hire into their DevOps teams from within their organization. However, many companies lack the necessary skills internally, and hiring new people may not be possible due to budget constraints.

One approach is to create an internal training university, as the courier delivery firm FedEx did. Knowing that its pool of engineers lacked the right skills, its CIO launched the FedEx Cloud Dojo, which teaches the company’s own engineers modern software development and new technologies and functions as a university for FedEx. The university has reskilled more than 25,000 software programmers.

Organizations that want to use DevOps to advance their digital transformation must drastically improve how they learn, train for, and improve the skills essential to DevOps.

- Upskilling and cross-skilling will lead to the rise of the T-shaped professional

Recognizing the strained talent market, organizations and individuals will invest heavily in upskilling and cross-skilling to meet accelerating demand for new skills. While all IT professionals will need to become more competent across domains, developers in particular will have to broaden their skills in areas such as containerization, AI, infrastructure, testing, and security.

There will also be a stronger emphasis on core skills such as customer experience, empathy, and collaboration. Silos are coming down in many areas, and becoming T-shaped, with depth in one area and breadth across many, will be necessary to enable and support innovation.

- Tool fatigue will worsen before it gets better

The number of tools and frameworks in technology is daunting. IT teams will keep struggling to understand, interconnect, and apply many of them, and in 2020 there is no real resolution in sight.

Competition in the DevOps toolchain is fierce and flourishing; events and conferences are packed with sessions on the latest technologies and best practices. Blogs, books, and videos flood email inboxes, with thought leaders eager to share their expertise, and more open-source tools keep emerging to integrate new technologies.

To survive this complexity, it is increasingly essential to have an automation strategy. As you develop one, don’t lose sight of the actual problem you are trying to solve and how your own teams can get you there.

- Security champion programs form the bedrock of DevSecOps

One of the most popular DevOps predictions is that organizations increasingly need to shift security left in the development lifecycle as part of a comprehensive DevSecOps strategy. At a practical level, one of the most prevalent tactics for executing DevSecOps in large organizations will be security champion programs.

- Introduction of Low-code Tools

Even today, DevOps automation helps streamline CI/CD processes, but developers still have to define essential parts of the pipeline with YAML files, job specifications, and other labor-intensive tasks.

Because DevOps primarily emphasizes accelerating the entire process, one significant trend expected in 2020 is the introduction of low-code tools, which let teams define a pipeline through a simple point-and-click UI. These tools can help enterprise software development teams make optimal use of technologies and help them create and maintain pipelines, policies, and Helm charts.

- Kubernetes

One of the chief DevOps trends revolves around Kubernetes. The portable and reliable open-source container orchestration system was used extensively in 2019. Since its 1.0 release in 2015, it has attracted a great deal of attention in the DevOps arena, and 2019 saw several of its core APIs grow and mature. Its adoption is still growing and is likely to continue.


Best Tools for Cloud Infrastructure Automation


The integration of development and operations brings a new perspective to software development. If you are new to DevOps practices or looking to improve your current processes, it can be challenging to know which tool is best for your team.

Let’s take a look at the best DevOps infrastructure automation tools, from automated build tools to application performance monitoring platforms.

- Ansible

Ansible automates a range of IT tasks, including configuration management, cloud provisioning, and application deployment. It focuses on how the various systems in your IT infrastructure interact with each other rather than on managing one component at a time.

Ansible can be managed through a web interface called Ansible Tower. Tower offers a range of pricing models: users can choose a basic, standard, or premium package and get a custom quote for the features they use.

- Datadog

Datadog is primarily a monitoring tool for cloud applications. It offers detailed metrics for your cloud applications, servers, and networks, and it integrates easily with automation tools such as Chef, Puppet, and Ansible.

Datadog helps you detect and troubleshoot problems in your systems quickly, which makes it a time- and cost-efficient solution for managing cloud infrastructure.

- Puppet

Puppet is an Infrastructure as Code (IaC) tool that lets users define the desired state of their infrastructure and automates systems to reach that state.

It continuously checks your systems and corrects deviations from the defined state. From simple workflow automation to infrastructure configuration and compliance, Puppet covers it all. The open-source tools are free, while the enterprise edition is paid beyond ten nodes; companies can get custom pricing quotes based on their requirements.

Used by companies such as Google and Dell, Puppet is a strong choice for maintaining consistency across systems while maximizing productivity.
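Puppet manifests are written in Puppet’s own declarative language, and the Python below is not Puppet. It is only a hypothetical sketch of the desired-state idea described above: compare the declared state with the actual state, plan the changes, apply them, and confirm that a second run finds nothing left to do (idempotence). The resource names and functions are illustrative assumptions, not Puppet APIs.

```python
def plan_changes(desired, actual):
    """List the actions needed to bring `actual` in line with `desired`."""
    actions = []
    for resource, want in desired.items():
        have = actual.get(resource)
        if have is None:
            actions.append(("create", resource, want))
        elif have != want:
            actions.append(("update", resource, want))
    return actions  # resources not declared in `desired` are left unmanaged

def apply_changes(actual, actions):
    """Return a new state with every planned action applied."""
    new_state = dict(actual)
    for _op, resource, want in actions:
        new_state[resource] = want
    return new_state

# Hypothetical resources, written as "type:name" -> desired value.
desired = {"package:nginx": "installed", "service:nginx": "running", "file:/etc/motd": "Welcome"}
actual = {"package:nginx": "installed", "service:nginx": "stopped"}

actions = plan_changes(desired, actual)
print(actions)  # [('update', 'service:nginx', 'running'), ('create', 'file:/etc/motd', 'Welcome')]
actual = apply_changes(actual, actions)
print(plan_changes(desired, actual))  # [] because a second run finds no drift
```

Declaring the end state instead of a sequence of commands is what lets a tool like Puppet re-run safely and repair drift whenever someone changes a machine by hand.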

- Selenium

Originally created for testing web applications, Selenium is a robust tool for automating web browsers and is widely regarded as one of the best tools for web app testing. With Selenium, companies can quickly write test scripts that catch bugs and build automated regression testing.

Selenium is a suite of tools, each serving a different purpose: Selenium Integrated Development Environment (IDE), Selenium Remote Control (RC), Selenium WebDriver, and Selenium Grid. It is free, open-source software released under the Apache 2.0 license.

It is easy to install and use, and it supports extensions.

- Docker

Docker is a containerization tool widely used for continuous integration and deployment of code. Developers can create and manage applications as containers built from Dockerfiles.

Containers package an application in an isolated environment together with its system files, code, libraries, and other dependencies. Hence, Docker is highly preferred by companies engaged in multi-cloud and hybrid computing.

Docker saves a lot of time and resources, improves productivity, and integrates easily with existing systems.

- Cisco Intelligent Automation for Cloud

Cisco provides a number of cloud offerings, from private to public to hybrid solutions. Beyond these, Cisco Intelligent Automation for Cloud covers everything from infrastructure as a service (IaaS) to hands-on provisioning and management of instances running in the Cisco cloud or in other cloud environments, including VMware, OpenStack, and AWS.

Its features include a self-service portal for your cloud users, multi-tenancy, and network service automation. Although it was created with Cisco’s own cloud infrastructure offerings in mind, Cisco Intelligent Automation for Cloud can extend its automation tooling into other ecosystems.

- Vagrant

Vagrant is an excellent tool for configuring virtual machines for development environments. It runs on top of virtualization providers such as VirtualBox, VMware, and Hyper-V. All the configuration a VM needs lives in a single file called the Vagrantfile. Once an environment is defined, it can be shared with other developers so that everyone works in the same development environment. Vagrant also has many plugins for cloud provisioning, configuration management tools, and Docker.

RECENT POSTS

- Clavent’s Emerge 2020 Martech Summit Goes Virtual, powered by CleverTap (08 Sep 2020, PR)
- Emerge 2020 HR Tech Summit Concluded Successfully (12 Jul 2020, PR)
- Emerge 2020 HR Tech Summit (12 Jul 2020, PR)
- My Experience Attending "How to Build High Performing Teams?" - Workshop On How to be a Good Manager. (12 May 2020, Authored Articles)
- Top Quantum Computing Companies to look out for in 2020 (29 Apr 2020, Listicles)

7 Reasons you need workflow automation


Don’t you want to get the most out of your workflow automation software? If it is flexible enough, you can use it for far more than the typical HR and document management tasks that so many companies use it for.

Automating workflows is not just about doing things faster and more easily but also about doing them better. Well-designed workflows can help reduce errors and collect valuable data along the way: data that can be used for better budget planning, more effective customer tracking, and even faster recovery when disaster strikes. Here are seven workflow automation use cases:

- Automatic Approval or Eligibility Evaluation

Do small purchase orders really need departmental approval? If not, your purchase order workflow can automatically evaluate conditions so that only purchases above a certain threshold amount are sent for departmental approval. And should users try to game the system by submitting a series of purchases just under the threshold, the system can count the purchase orders started by the same person within a defined time period and proceed accordingly.
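The routing rule above can be sketched in a few lines of Python. This is a minimal illustration, not the logic of any particular workflow product: the threshold, the three-order limit, the seven-day window, and the names `needs_approval` and `PurchaseOrder` are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Hypothetical policy values; set these to match your own purchasing rules.
APPROVAL_THRESHOLD = 500.00  # orders above this always need sign-off
MAX_SMALL_ORDERS = 3         # recent under-threshold orders allowed per person
WINDOW = timedelta(days=7)   # look-back period for the split-order check


@dataclass
class PurchaseOrder:
    requester: str
    amount: float
    submitted: datetime


def needs_approval(order: PurchaseOrder, history: List[PurchaseOrder]) -> bool:
    """Return True if the order must go to departmental approval."""
    if order.amount > APPROVAL_THRESHOLD:
        return True
    # Guard against gaming the threshold with a series of small orders.
    recent_small = [
        po for po in history
        if po.requester == order.requester
        and po.amount <= APPROVAL_THRESHOLD
        and timedelta(0) <= order.submitted - po.submitted <= WINDOW
    ]
    return len(recent_small) >= MAX_SMALL_ORDERS


now = datetime(2020, 6, 1, 12, 0)
history = [PurchaseOrder("alice", 480.0, now - timedelta(days=d)) for d in (1, 2, 3)]
print(needs_approval(PurchaseOrder("bob", 120.0, now), history))    # False
print(needs_approval(PurchaseOrder("alice", 490.0, now), history))  # True: 4th small order this week
print(needs_approval(PurchaseOrder("carol", 750.0, now), []))       # True: over the threshold
```

Counting orders matches the rule described in the text; a variant could instead sum the amounts of recent small orders and compare that total with the threshold.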

- IT Support Tickets

Maybe your IT team consists of a single person hired to make sure that your computers and devices all work as intended. Even so, using workflow software to create an IT ticket system, with online forms where users describe their problems, is smart. Requests no longer get lost, and your IT person can collect valuable data that can inform future IT purchases or even make the business case for hiring an additional IT worker.
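A ticket system like the one described needs little more than a record of every request and a way to summarize it. The sketch below is a hypothetical, in-memory Python version (the `Ticket` and `TicketQueue` classes and the sample categories are illustrative assumptions); a real workflow tool would store tickets in a database behind its online forms.

```python
from collections import Counter
from dataclasses import dataclass, field
from itertools import count
from typing import Iterator, List


@dataclass
class Ticket:
    ticket_id: int
    user: str
    category: str
    description: str
    status: str = "open"


@dataclass
class TicketQueue:
    """In-memory ticket log; a real system would persist tickets to a database."""
    tickets: List[Ticket] = field(default_factory=list)
    _ids: Iterator[int] = field(default_factory=lambda: count(1), repr=False)

    def submit(self, user: str, category: str, description: str) -> Ticket:
        ticket = Ticket(next(self._ids), user, category, description)
        self.tickets.append(ticket)  # every request is recorded, so none gets lost
        return ticket

    def close(self, ticket_id: int) -> None:
        for ticket in self.tickets:
            if ticket.ticket_id == ticket_id:
                ticket.status = "closed"
                return
        raise KeyError(ticket_id)

    def counts_by_category(self) -> Counter:
        """Ticket volume per category, e.g. to justify a purchase or a new hire."""
        return Counter(t.category for t in self.tickets)


queue = TicketQueue()
queue.submit("ana", "printer", "Paper jam on floor 2")
laptop = queue.submit("raj", "laptop", "Battery drains within an hour")
queue.submit("li", "printer", "Toner low")
queue.close(laptop.ticket_id)
print(queue.counts_by_category()["printer"])  # 2
```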

- Submission of Tweets to Your Social Media Manager

If you have a social media team, it is often best to have a single person in charge of posting social media messages, Tweets, and the like. A simple online form for submitting Tweets to the social media manager ensures that every post meets specifications and is published strategically, at the time it is most likely to receive the attention it deserves. Such forms are also valuable if an old post ever needs to be retrieved or taken down later on.

- A Disaster Recovery Workflow

Suppose your company experiences a natural disaster, break-in, or data breach. It is unsettling, and it can be hard to know what to do first. Establishing a disaster recovery workflow helps: such a workflow can walk users through essential steps, like bringing in extra staff, calling the authorities, and contacting insurers. Too many businesses put disaster recovery on the back burner and are then caught out when something actually happens.
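The "walk users through essential steps" behavior can be sketched as an ordered checklist that only ever shows the next pending step. This is a hypothetical Python illustration: the `Checklist` class is invented for the example, and the order of the steps is an assumption, since the right sequence depends on your own recovery plan.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Checklist:
    """Walks a user through recovery steps strictly in the order given."""
    steps: List[str]
    done: List[str] = field(default_factory=list)

    def next_step(self) -> Optional[str]:
        for step in self.steps:
            if step not in self.done:
                return step
        return None  # every step has been completed

    def complete(self, step: str) -> None:
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"expected {expected!r}, got {step!r}")
        self.done.append(step)


plan = Checklist(["Call the authorities", "Contact insurers", "Bring in extra staff"])
print(plan.next_step())  # Call the authorities
plan.complete("Call the authorities")
print(plan.next_step())  # Contact insurers
```

Refusing out-of-order completions keeps stressed users from skipping a step, at the cost of flexibility; a looser design could allow any order and simply report what is still outstanding.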

- Managing Accounts Receivable

An automated workflow that flags accounts receivable (AR) items more than 30 days old ensures that no accounts slip through the cracks and end up being written off. For example, when an account reaches the 30-day threshold, a reminder can be sent to an AR team member to contact the customer by email. When an account reaches a 60- or 90-day threshold, the workflow can prompt a team member to follow up by phone or certified mail in an attempt to collect all the money owed to you.
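The aging rule above maps naturally onto a small function. In this Python sketch, `ar_action` is a hypothetical name, and pairing the 60-day threshold with a phone call and the 90-day threshold with certified mail is an assumption; the text leaves that split open.

```python
from datetime import date
from typing import Optional


def ar_action(invoice_date: date, today: date, paid: bool = False) -> Optional[str]:
    """Return the follow-up an open receivable needs, or None if nothing is due yet."""
    if paid:
        return None
    age_days = (today - invoice_date).days
    # Check the oldest bucket first so each invoice gets exactly one action.
    if age_days >= 90:
        return "certified mail"
    if age_days >= 60:
        return "phone call"
    if age_days >= 30:
        return "email reminder"
    return None


today = date(2020, 9, 30)
for issued in (date(2020, 9, 15), date(2020, 8, 25), date(2020, 7, 20), date(2020, 6, 1)):
    print(issued, ar_action(issued, today))
```

Run daily, this prints no action for the 15-day-old invoice, an email reminder at 36 days, a phone call at 72 days, and certified mail at 121 days.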

- Communication in distributed systems

Distributed systems have become the new normal in information technology. They are complicated, partly because of the eight fallacies of distributed computing (such as assuming that the network is reliable). Many developers are not yet aware of how much changes when remote communication is unreliable, faults have to be accepted, and transactional guarantees are traded for eventual consistency.
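One common way to live with unreliable remote communication is to retry transient failures with exponential backoff and to make the remote operation idempotent, so a retry after a lost response cannot apply the same change twice. The Python sketch below illustrates that general technique under simplified assumptions; `call_with_retry`, `TransientError`, and the simulated `FlakyPaymentService` are hypothetical names, not a real library's API.

```python
import random
import time
import uuid
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """A failure that may succeed on retry (timeout, dropped connection)."""


def call_with_retry(fn: Callable[[], T], attempts: int = 5, base_delay: float = 0.1) -> T:
    """Call fn, retrying transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the fault instead of hiding it
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay))  # jitter avoids retry storms
    raise RuntimeError("unreachable")


class FlakyPaymentService:
    """Hypothetical remote service whose first response is lost after the charge is applied."""

    def __init__(self) -> None:
        self.applied: Dict[str, int] = {}
        self.calls = 0

    def charge(self, request_id: str, cents: int) -> str:
        self.calls += 1
        # Idempotent: a repeated request_id never creates a second charge.
        self.applied.setdefault(request_id, cents)
        if self.calls == 1:
            raise TransientError("response lost in transit")
        return "ok"


service = FlakyPaymentService()
request_id = str(uuid.uuid4())  # generated once and reused on every retry
result = call_with_retry(lambda: service.charge(request_id, 1999), base_delay=0.01)
print(result, service.calls, len(service.applied))  # ok 2 1
```

The service was called twice but charged once: the retry hides the lost response, and the shared request ID keeps the outcome consistent.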

- Orchestration

Modern architectures are all about decomposition into microservices or serverless functions. When you have many small components that each do one thing well, you are forced to connect the dots to implement real use cases. This is where orchestration plays a big role: it invokes the components in a defined sequence.
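A minimal orchestrator can be sketched in Python as a loop that invokes each step in order and passes a shared context along. This sketch also adds saga-style compensation (undoing completed steps in reverse when a later step fails), which goes beyond the text and is included only as one common way to cope without distributed transactions. `Step`, `orchestrate`, and the order-processing steps are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

Context = Dict[str, Any]


@dataclass
class Step:
    name: str
    action: Callable[[Context], None]
    compensate: Optional[Callable[[Context], None]] = None  # undo action, if any


def orchestrate(steps: List[Step], ctx: Context) -> Tuple[bool, List[str]]:
    """Invoke steps in order; if one fails, undo the completed ones in reverse."""
    completed: List[Step] = []
    log: List[str] = []
    for step in steps:
        try:
            step.action(ctx)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            for done in reversed(completed):
                if done.compensate is not None:
                    done.compensate(ctx)
                    log.append(f"compensated {done.name}")
            return False, log
        completed.append(step)
        log.append(f"{step.name} ok")
    return True, log


def charge_card(ctx: Context) -> None:
    raise RuntimeError("card declined")  # simulated downstream failure


ctx: Context = {}
ok, log = orchestrate(
    [
        Step("reserve_stock", lambda c: c.update(reserved=True), lambda c: c.update(reserved=False)),
        Step("charge_card", charge_card),
    ],
    ctx,
)
print(ok)   # False
print(ctx)  # {'reserved': False}
```

Keeping the sequence in one place makes the flow easy to read and change; the trade-off is that the orchestrator becomes a central component that must itself be reliable.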
